Hot Path Analytics with CosmosDB and Azure Stream Analytics

The Internet of Things (IoT) has opened up a new, virtually inexhaustible source of technical innovation that is valuable across a broad variety of industries. By applying smart connected devices, sensors, and gateways to control each part of the production process, manufacturing and infrastructure companies are dramatically increasing their operational efficiency. At the same time, the consumer tech market has exploded with an abundance of smart products and the spin-off cloud services they brought to life. In this article, we are going to look at one IoT use case: how to perform Hot Path Analytics with Cosmos DB and Azure Stream Analytics.

According to the lambda architecture, there are two paths for data to flow through the analytics pipeline:

- A “hot” path for latency-sensitive data, where results need to be ready in seconds or less and flow for rapid consumption by analytics clients.

- A “cold” path where all data goes and is processed in batches, which can tolerate greater latency until results are ready.
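To make the two paths concrete, here is a toy sketch in Python. It is not part of the Azure setup; the `Pipeline` class and the field names are hypothetical. Every event is appended to a cold store for later batch processing, while the hot path keeps only a short in-memory window so it can answer immediately.

```python
from collections import deque
from statistics import mean


class Pipeline:
    """Toy lambda-style pipeline: one ingest call feeds both paths."""

    def __init__(self, hot_window_size=5):
        # Cold path: keep every event so batch jobs can process the full history.
        self.cold_store = []
        # Hot path: keep only the most recent readings for low-latency answers.
        self.hot_window = deque(maxlen=hot_window_size)

    def ingest(self, event):
        self.cold_store.append(event)
        self.hot_window.append(event["temperature"])

    def hot_average(self):
        """Fast answer computed from the recent window only."""
        return mean(self.hot_window)

    def cold_average(self):
        """Batch answer computed over everything stored so far."""
        return mean(e["temperature"] for e in self.cold_store)


pipeline = Pipeline(hot_window_size=3)
for t in [20.0, 21.0, 22.0, 30.0, 31.0, 32.0]:
    pipeline.ingest({"deviceId": "pi-sim", "temperature": t})

print(pipeline.hot_average())   # average of the last 3 readings
print(pipeline.cold_average())  # average of all 6 readings
```

In the rest of this article, Stream Analytics plays the role of the hot path and Cosmos DB holds the results for fast reads.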


Why Azure Cosmos DB?

Azure Cosmos DB is Microsoft’s globally distributed, multi-model database. With the click of a button, it enables you to elastically and independently scale throughput and storage across any number of Azure’s geographic regions. It offers throughput, latency, availability, and consistency guarantees backed by comprehensive service level agreements (SLAs).

You can try Azure Cosmos DB for free, without an Azure subscription and without any commitment.

As a globally distributed database service, Azure Cosmos DB provides the following capabilities to help you build scalable, highly responsive applications:

Key Capabilities

- Turnkey global distribution – You can distribute your data to any number of Azure regions, with the click of a button. This enables you to put your data where your users are, ensuring the lowest possible latency to your customers.

- Multiple data models and popular APIs for accessing and querying data – APIs for the following data models are supported, with SDKs available in multiple languages:

  - SQL API (formerly DocumentDB API): A schema-less JSON database engine with SQL querying capabilities.

  - MongoDB API: A MongoDB database service built on top of Cosmos DB.

  - Table API: A key-value database service built to provide premium capabilities for Azure Table storage applications.

  - Graph (Gremlin) API: A graph database service built following the Apache TinkerPop specification.

  - Cassandra API: A key/value store built on the Apache Cassandra implementation.

- Elastically scale throughput and storage on demand, worldwide

- Support for highly responsive and mission-critical applications

- “Always on” availability

- No database schema/index management

In the next sections, we are going to look at how to:

- Create a device

- Connect the Pi Simulator to IoT Hub

- Send telemetry data to Azure

- Set up Cosmos DB

- Set up a Stream Analytics job with windowing techniques

- Explore the Streaming data

#1.Create a device

We will be using the Azure IoT Hub service to connect the device simulator and start sending data. Azure IoT Hub is a fully managed service that enables reliable and secure bidirectional communications between millions of IoT devices and a solution back end.

With IoT Hub you can:

- Establish bi-directional communication with billions of IoT devices

- Authenticate per device for security-enhanced IoT solutions

- Register devices at scale with IoT Hub Device Provisioning Service

- Manage your IoT devices at scale with device management

- Extend the power of the cloud to your edge device

To start, click on Create a resource and then select Internet of Things.

Create an IoT Hub to connect your real device or simulator.

Use an existing resource group or create a new one. In the Name field, enter a name for your IoT hub; it must be unique across all IoT hubs.

In the Tier field, select the S1 tier. You can choose from several tiers depending on how many features you want and how many messages you send through your solution per day.

For details about the tier options, check out Choosing the right IoT Hub tier.
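Since the tier choice depends on daily message volume, a quick back-of-the-envelope calculation helps. The sketch below is only an estimate. The per-unit daily quotas are figures I am assuming from the IoT Hub pricing page and should be verified there, and the 2-second send interval is an assumed rate for a single simulator.

```python
SECONDS_PER_DAY = 24 * 60 * 60

# Assumed per-unit daily message quotas; verify against the current IoT Hub pricing page.
DAILY_QUOTA_PER_UNIT = {
    "F1": 8_000,
    "S1": 400_000,
    "S2": 6_000_000,
    "S3": 300_000_000,
}


def messages_per_day(devices, interval_seconds):
    """Messages per day if every device sends one message per interval."""
    return devices * (SECONDS_PER_DAY // interval_seconds)


def units_needed(tier, devices, interval_seconds):
    """Smallest number of units of the given tier that covers the daily volume."""
    quota = DAILY_QUOTA_PER_UNIT[tier]
    volume = messages_per_day(devices, interval_seconds)
    return -(-volume // quota)  # ceiling division without floats


print(messages_per_day(1, 2))    # one simulator, one message every 2 seconds
print(units_needed("S1", 1, 2))  # units of S1 for that single simulator
print(units_needed("S1", 50, 2)) # units of S1 for 50 such devices
```

Under these assumptions, a single simulator sending every 2 seconds would already exceed the free tier's daily quota, which is one reason to pick S1 for this walkthrough.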

Now that the IoT Hub is created, we will connect devices to it in the next steps.

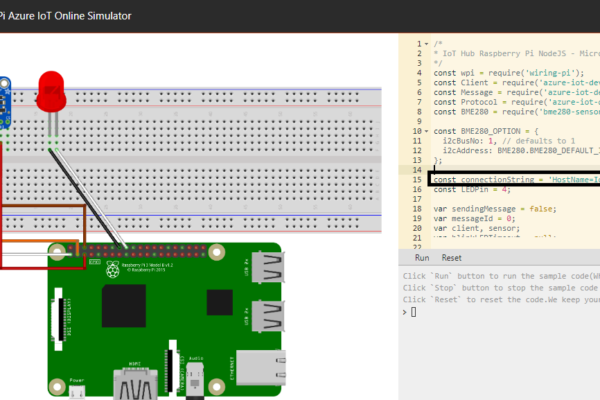

#2.Connect the simulator to IoT Hub

Go to your IoT Hub in the portal and click on IoT Devices. Click + Add, enter a device ID, and click Save.

Once the device is created, click on the device and copy the Primary Connection String.

Go to the Pi Simulator and replace the connection string with the Primary Connection String copied in the previous step.
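A pasted connection string is easy to truncate by accident, so it can be worth sanity-checking it before running the simulator. The helper below is a hypothetical sketch that assumes the usual `HostName=...;DeviceId=...;SharedAccessKey=...` layout of a device connection string. The values shown are placeholders, not real credentials.

```python
def parse_connection_string(conn_str):
    """Split 'Key=Value;Key=Value' pairs into a dict.

    Only the first '=' of each pair separates key and value,
    because a base64 key may itself end with '=' padding.
    """
    parts = {}
    for pair in conn_str.strip().split(";"):
        if not pair:
            continue
        key, sep, value = pair.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {pair!r}")
        parts[key] = value
    return parts


def check_device_connection_string(conn_str):
    """Raise ValueError if any of the expected fields is missing."""
    parts = parse_connection_string(conn_str)
    missing = {"HostName", "DeviceId", "SharedAccessKey"} - parts.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return parts


# Placeholder values only; the real string comes from the device page in the portal.
example = "HostName=my-hub.azure-devices.net;DeviceId=pi-sim;SharedAccessKey=ZmFrZS1rZXk="
parts = check_device_connection_string(example)
print(parts["HostName"], parts["DeviceId"])
```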

Click Run to start sending messages. The LED will start blinking, and messages will start flowing into the IoT Hub that we created earlier.
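If you would rather send telemetry from your own machine instead of the browser simulator, a minimal sketch could look like the following. The message fields (`messageId`, `deviceId`, `temperature`, `humidity`) and their value ranges are my assumptions about a typical payload. `send_telemetry` relies on the `azure-iot-device` package, which is imported lazily so the message builder can be tried without it.

```python
import json
import random


def build_telemetry(message_id, device_id="pi-sim"):
    """Build one JSON telemetry message; field names and ranges are assumptions."""
    return json.dumps({
        "messageId": message_id,
        "deviceId": device_id,
        "temperature": round(random.uniform(20.0, 30.0), 2),
        "humidity": round(random.uniform(60.0, 80.0), 2),
    })


def send_telemetry(connection_string, count=5):
    """Send a few messages to IoT Hub (requires: pip install azure-iot-device)."""
    from azure.iot.device import IoTHubDeviceClient, Message  # lazy import

    client = IoTHubDeviceClient.create_from_connection_string(connection_string)
    client.connect()
    try:
        for i in range(1, count + 1):
            client.send_message(Message(build_telemetry(i)))
    finally:
        client.disconnect()


print(build_telemetry(1))
```

Calling `send_telemetry` with the Primary Connection String copied earlier should produce the same kind of traffic as the simulator, just without the blinking LED.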

#3.Create Cosmos DB account

To start, click on Create a resource and then select Azure Cosmos DB.

Follow the wizard to create a new database account. For this tutorial, I have chosen the SQL API, which is a schema-less JSON database engine with rich SQL querying capabilities. Other APIs are also available and might be more suitable for your scenario.
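With the SQL API, each telemetry reading ends up as a JSON document. As a rough sketch, this is what such a document could look like and how it could be written with the `azure-cosmos` Python SDK. The database name `iot`, the container name `telemetry`, and the use of `deviceId` as partition key are all assumptions for illustration. In this tutorial Stream Analytics does the writing, so this code is only for experimenting.

```python
import uuid


def to_cosmos_document(reading):
    """Return a copy of the reading with a string 'id', which every item needs.

    'deviceId' is assumed to be the container's partition key.
    """
    doc = dict(reading)
    doc.setdefault("id", str(uuid.uuid4()))
    return doc


def upsert_reading(endpoint, key, reading, database="iot", container="telemetry"):
    """Write one reading to Cosmos DB (requires: pip install azure-cosmos)."""
    from azure.cosmos import CosmosClient  # lazy import

    client = CosmosClient(endpoint, credential=key)
    target = client.get_database_client(database).get_container_client(container)
    return target.upsert_item(to_cosmos_document(reading))


doc = to_cosmos_document({"deviceId": "pi-sim", "temperature": 24.5, "humidity": 70.1})
print(doc)
```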

#4.Create Stream Analytics Job

Azure Stream Analytics is a managed event-processing engine for setting up real-time analytic computations on streaming data. The data can come from devices, sensors, websites, social media feeds, applications, infrastructure systems, and more.

From the Azure Portal, click on Create a resource and then select Stream Analytics job.

A Stream Analytics job can be created to run in the cloud as well as on the Edge. For this tutorial, we are going to create it in the cloud.

Our next step is to add an input to the job. Since the telemetry arrives through IoT Hub, add the IoT Hub created earlier as the input (from the Inputs option on the left pane).

Then add Cosmos DB as an output for the streaming job (from the Outputs option on the left pane).

You will have to authorize the Cosmos DB connection so that Stream Analytics has access to write to Cosmos DB. Once the Cosmos DB output is added, we have to add a query and start the job.

Edit Query for Streaming Job:
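The exact query depends on your scenario. As one possibility, a tumbling-window query could average the temperature per device every 30 seconds. The Python sketch below mimics that behaviour locally so you can see what the windowing does. The query in the comment is a hypothetical example, and its input/output aliases and column names are placeholders, not values from this setup. As I understand the service's semantics, each window includes its end time and excludes its start time, which the sketch reproduces.

```python
import math
from collections import defaultdict

# Hypothetical Stream Analytics query that this sketch mimics
# (aliases and column names are placeholders):
#
#   SELECT System.Timestamp() AS windowEnd,
#          deviceId,
#          AVG(temperature) AS avgTemperature,
#          COUNT(*) AS readings
#   INTO [cosmos-output]
#   FROM [iothub-input] TIMESTAMP BY EventEnqueuedUtcTime
#   GROUP BY deviceId, TumblingWindow(second, 30)


def tumbling_window(events, size_seconds=30):
    """Group events into fixed, non-overlapping windows and aggregate each one.

    Every event falls into exactly one window (start, end], labelled by its end.
    """
    buckets = defaultdict(list)
    for event in events:
        window_end = math.ceil(event["ts"] / size_seconds) * size_seconds
        buckets[(window_end, event["deviceId"])].append(event["temperature"])

    return [
        {
            "windowEnd": window_end,
            "deviceId": device_id,
            "avgTemperature": sum(temps) / len(temps),
            "readings": len(temps),
        }
        for (window_end, device_id), temps in sorted(buckets.items())
    ]


# 'ts' is seconds since an arbitrary start; values are made up for illustration.
events = [
    {"ts": 2, "deviceId": "pi-sim", "temperature": 20.0},
    {"ts": 14, "deviceId": "pi-sim", "temperature": 22.0},
    {"ts": 30, "deviceId": "pi-sim", "temperature": 24.0},
    {"ts": 31, "deviceId": "pi-sim", "temperature": 30.0},
]
windows = tumbling_window(events)
for row in windows:
    print(row)
```

The first three readings land in the window ending at 30 seconds and the last one starts a new window, so Cosmos DB would receive one aggregated document per device per window rather than every raw message.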

Everything is ready. Start the streaming job, which will read data from IoT Hub and store it in Cosmos DB.

#5.Explore Streaming Data

Use the Cosmos DB Data Explorer to view the data being streamed from IoT Hub to Cosmos DB.

From here it flows for rapid consumption by analytics clients.
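An analytics client could read the newest documents with a query like the one sketched below. This is only an illustration. It assumes the documents carry a `deviceId` property and reuses the hypothetical `iot` database and `telemetry` container names. `_ts` is the system timestamp that Cosmos DB maintains on each item.

```python
def latest_readings_query(device_id, limit=10):
    """Build a parameterized SQL API query for the newest documents of one device."""
    return {
        "query": (
            f"SELECT TOP {int(limit)} * FROM c "
            "WHERE c.deviceId = @deviceId ORDER BY c._ts DESC"
        ),
        "parameters": [{"name": "@deviceId", "value": device_id}],
    }


def fetch_latest(endpoint, key, device_id, database="iot", container="telemetry"):
    """Run the query against Cosmos DB (requires: pip install azure-cosmos)."""
    from azure.cosmos import CosmosClient  # lazy import

    client = CosmosClient(endpoint, credential=key)
    target = client.get_database_client(database).get_container_client(container)
    spec = latest_readings_query(device_id)
    return list(target.query_items(
        query=spec["query"],
        parameters=spec["parameters"],
        enable_cross_partition_query=True,
    ))


spec = latest_readings_query("pi-sim", limit=5)
print(spec["query"])
```

The same query text can be pasted into the Data Explorer, replacing `@deviceId` with a quoted device name.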

Congrats! In this article, we have learned how to perform Hot Path Analytics with Cosmos DB and Azure Stream Analytics.


